Last week, I talked about Moloch, the evil god of child sacrifice, as a metaphor for unhealthy competition: races to the bottom, prisoner’s dilemmas, and coordination problems generally.

We summon Moloch when competitive pressures require us to optimize for narrow rewards, especially ones that are easily measurable or “legible”, while dispensing with the wider range of values that resist measurement.

Putting something of a face to the phenomenon is useful. It’s adaptive to recognize Moloch so we can redirect our blame from individuals to the system. We are collectively in charge of the system. We need to recognize the high-leverage nodes and rules within that system, not only to protect them from tampering, but to make sure they make sense for our communal well-being in the first place.

Our primary levers are incentives. They take many forms. They can be hard like carrots and sticks or soft like status and honor. I think of culture as the sum of these incentives. This becomes apparent if you try to decompose subcultures, national identities, or the values of corporations into what makes their members tick: that which makes one rise or fall within the confines of a specific organization or tribe.

I said the comet in Don’t Look Up should have been named Moloch. But I don’t want to leave this topic making you feel I double-clicked on apocalypse.exe. I promised a lifeline, so this week I’ll give you some materials that point us in the right direction.

I’ll start with an interview with poker player Liv Boeree. The entire conversation she has with host Alexander Beiner is provocative infotainment dealing with game theory and culture, and she is obsessed with the Moloch metaphor. I love the race-to-the-bottom example she dwells on: Instagram beauty filters and their effect on teenagers (or really everyone) on social media. She herself finds the temptation to use them quite powerful.

Here are more excerpts that caught my attention:

Moloch’s unhealthy optimization embodied by the “Paperclip Maximizer”

A byproduct of [unhealthy competition] is that if you play it out to its logical conclusion, it means that you will turn basically everything in the universe into this one thing. The ultimate instantiation of that is the “Paperclip Maximizer”. This is a thought experiment, I think by Eliezer Yudkowsky, of how a superintelligent AI could go wrong. Suppose it’s unbelievably good at getting whatever it wants done, done. But it's stupid to the extent that it was basically programmed to do this one narrow thing, which in this instance, you wanted to make paperclips. You wanted the AI to make more paperclips better than what you can currently do. It’s a paperclip maker, but because it's so unbelievably good, it turns everything from the factory it's in [into paperclips]. It figures out how to pull the constituent parts of atoms, the blood, the hemoglobin in your blood, the iron. It extracts and dismantles until it can turn anything in the universe into paperclips.

How maximizing can “dismantle the universe into a low complexity state”

This is analogous a little bit to the heat death of the universe. Because that is actually a very low complexity state. It's just a homogenous grey soup. The universe started out with very low complexity, a singularity of matter and energy. If we're talking in terms of Kolmogorov complexity, which is basically, “how many bits of code do you need to describe a thing?” The universe started out pretty simple. Then time started, things started unfolding and suddenly we started seeing hydrogen and then helium and that coalesced into stars, which could have created greater heavier elements. All this beautiful complexity started emerging. Patternicity is like a dance between order and disorder. A bit of hierarchy, but a bit of anarchy. This creates this highly complex, dynamic system that's very hard to describe. To write the piece of code to describe the universe, you basically have to just create the universe. That's what a highly complex system is.

But at some point, the stars will die out and so on. All this sort of free energy that is used to create all this complexity will start dissipating. And then it'll slowly, as far as we know, turn into this gray soup, which has low complexity. Entropy will do this over time.

Never thought of this as good vs evil!

This is like what a paperclip maximizer would do. It's permanently curtailing. There's no more complexity to rise. The universe has reached this steady state. And that seems like a tragedy of enormous potential because, at least up until now, it seems like the universe is trying to emerge into greater and greater states of complexity. So I hate to boil it down to like good and evil terms, but to me good is that which creates, allows for greater emergence and complexity to appear and thereby utility. Useful information that we can process and make wonderful things with.

And evil is that which does the opposite. It turns things into a low diversity, very basic situation, whether it's a cloud of hydrogen or as Ginsberg describes [in the Howl poem] Moloch, who is a cloud of “sexless hydrogen”. So it's like this force of entropy, but it's slightly different because entropy is actually neutral. Entropy is just like time effectively. Whereas Moloch is the thing that turns everything into this like one modern focus, sacrificing everything in order to win this one thing, hence the child sacrifice.

The role of healthy competition

Competition can also be an enormous force for good. The capitalistic model has raised the world to what it is right now. We would not be living the cushy life with a lot of the luxuries that capitalism has provided [without it].

How technology fits into this

Technology is exponential, making it much easier for Moloch to destroy the universe. But at the same time, we're also building technologies that enable cooperation, helping us better coordinate with one another.

My takeaway

Moloch is the evil that comes from overly narrow optimization in service of a competition that has lost the script. Coordination problems devolve into sub-optimal equilibria that are difficult to escape from.
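To make "sub-optimal equilibria that are difficult to escape from" concrete, here is a minimal sketch of a two-player prisoner's dilemma. The payoff numbers are hypothetical, chosen only so that defecting is individually tempting. They do not come from the interview or any of the readings, and the helper name `is_stable` is my own.

```python
from itertools import product

# Hypothetical payoffs (higher is better), for illustration only.
# PAYOFFS[(a, b)] = (payoff to player A, payoff to player B)
PAYOFFS = {
    ("cooperate", "cooperate"): (3, 3),
    ("cooperate", "defect"): (0, 5),
    ("defect", "cooperate"): (5, 0),
    ("defect", "defect"): (1, 1),
}
MOVES = ("cooperate", "defect")


def is_stable(a, b):
    """True if neither player gains by switching moves on their own."""
    a_payoff, b_payoff = PAYOFFS[(a, b)]
    a_stuck = all(PAYOFFS[(alt, b)][0] <= a_payoff for alt in MOVES)
    b_stuck = all(PAYOFFS[(a, alt)][1] <= b_payoff for alt in MOVES)
    return a_stuck and b_stuck


# Outcomes nobody can escape unilaterally (Nash equilibria).
equilibria = [pair for pair in product(MOVES, MOVES) if is_stable(*pair)]

# The outcome with the highest combined payoff.
best_joint = max(PAYOFFS, key=lambda pair: sum(PAYOFFS[pair]))

print("Stable outcomes:", equilibria)
print("Best joint outcome:", best_joint)
```

With these made-up numbers, the only stable outcome is mutual defection, even though mutual cooperation leaves both players better off. Neither player can fix that alone, which is why escaping takes coordination.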

If there is a remedy, I suspect it’s a mix of the following (I included readings I really enjoyed for each prong):

Surplus/redundancy/slack in a system to relieve the pressure to slide into counterproductive or sociopathic competitions

✎ Studies on Slack (26 min read) by Scott Alexander

✎ Greedy Algorithms And The Need For Illegibility (6 min read) by Rohit Krishnan

✎ Casualties of Perfection (4 min read) by Morgan Housel

Cooperation

✎ An Ode To Cooperation (7 min read) by Matt Hollerbach

(this lightly quantitative take on cooperation also underpins portfolio theory!)

Appreciating the limits of legibility

Moloch sustains itself on unhealthy competition. What makes a competition unhealthy is when it becomes all-consuming by compressing our values into a narrow band like the paperclip AI. The AI must process structured and unstructured data, but its meta-intelligence needs to make choices directed by goals.

I can’t see how “goals” in any holistic sense of the word can be a solved problem. How can we escape the simple trope “Not all that we measure matters, and not everything that matters can be measured”?

We impose legibility, then recursively use the legibility to make inferences that further cement the belief that what we originally measured was important; otherwise, we wouldn’t have measured it. There’s a circularity that makes us vulnerable to mutated assumptions. Sometimes, it’s a good idea to climb up into a Moontower with some friends, take a pause from the battle, and use that calm, high vantage point to wonder, “Which unsaid assumptions bear more weight than they should? And how did that happen?”

When you’re feeling open, let yourself wander into this essay by Tom Morgan:

✎ The Most Interesting Thing I’ve Ever Read (25 min read)

(If you find the title clickbaity, congrats — you understand Moloch!)

I mentioned how Moloch is a force that sacrifices values that are hard to measure. Tom’s essay will bridge you into a sea of thinking that deliberates on both the power of science and its limitations (at least in its current state) as a means to receive knowledge.

[also, thanks to Tom for pointing me to a number of sources that I read after the original Moloch post]

A Final Indulgence

I’ll stretch a bit here.

Moloch feeds on our forgetting that the objects of our desire are actually proxies for what we need. We feed our egos with sex, money, and status to gain control. We impose legibility to gain control. Is control freedom? Or is control safety (i.e. the freedom from fear)?

Competition feels like it turns unhealthy when it becomes about control. The illusion of control is that it promises both freedom and safety. But control doesn’t scale mutually: for there to be a controller, there must be a controlled.

The trade-offs between freedom and safety, individualism and community feel very stark today. If it feels binary, it’s because the prevailing narratives have profited from framing these values in such divisive terms. The tension between these values is natural, but our sensation of the tension might be amplified artificially.

I’m not downplaying the trade-off so much as trying to restore its grey hue. I’m not well-equipped to do so, but I urge you to listen to war journalist and author Sebastian Junger’s interview with Russ Roberts. The stories within are engaging but the nuanced discussion, trading off between freedom and safety, is the payoff. I feel like the word “freedom” is a political football that has been turned into a cartoon and this interview’s depth makes it quite apparent.

Money Angle

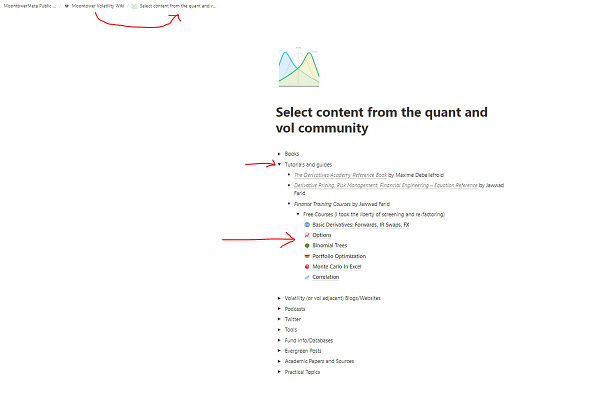

Moontower reader Jeff sent me a treasure trove of free technical course materials to add to the volatility wiki.

Here’s a thread explaining what you’ll find and where to find it:

Last Call

Last month, Disney+, in partnership with National Geographic, released the documentary about the Thai boys who were trapped in a cave in June of 2018.

The bravery and ingenuity on display will push your conception of what humans can do when they cooperate.

We watched it with the kids. The gripping footage and editing (same team as Free Solo) honored the miracle that was: